Risk management starts with identifying bad situations and assessing how likely they are to occur. Living through a pandemic, everyone has now had a crash course in risk management. Should I go to this restaurant tonight? If I do, what is the likelihood that I’ll get COVID? And if I do get it, how bad would that be?

Just as in their personal lives, security professionals apply risk management across countless areas of security to answer two questions: How critical is this issue? And how urgently must I address it? With finite time and resources, effective risk management is the foundation for staying ahead of the next breach. As we review vulnerabilities from our network scans, bugs in our code, incidents in our triage queue, or the list of audit findings, security leaders are forced to decide where to apply resources, where to deploy mitigating controls, and where to accept the risk, all to keep our organizations as secure as reasonably possible.

To make these decisions well, we need precision about the risk we are weighing. To get that precision, it helps to unpack risk into its two components:

Risk = Likelihood × Consequence

For instance: what is the likelihood that a vulnerability on my unpatched server will be exploited, and what is the consequence if it is?
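To make the formula concrete, here is a minimal sketch that ranks a few findings by Likelihood × Consequence. The findings, the probability estimates, and the 1-10 consequence scale are all hypothetical; in practice these inputs would come from threat intelligence and asset valuation.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    likelihood: float   # hypothetical estimated probability of exploitation (0.0-1.0)
    consequence: float  # hypothetical impact if exploited, on an illustrative 1-10 scale

    @property
    def risk(self) -> float:
        # Risk = Likelihood x Consequence
        return self.likelihood * self.consequence


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest risk."""
    return sorted(findings, key=lambda f: f.risk, reverse=True)


findings = [
    Finding("unpatched internet-facing server", likelihood=0.6, consequence=9),
    Finding("outdated library on internal test box", likelihood=0.3, consequence=2),
    Finding("weak TLS config on marketing site", likelihood=0.2, consequence=4),
]

for f in prioritize(findings):
    print(f"{f.name}: {f.risk:.1f}")
```

Neither factor alone decides the order: a likely but low-impact issue can rank below a less likely issue on a critical asset.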

To answer these questions, the security industry has developed frameworks and standards that help us prioritize and triage vulnerabilities:

- The Common Vulnerability Scoring System (CVSS) rates the severity of a vulnerability.

- Common Vulnerabilities and Exposures (CVE) is a catalog that assigns a unique identifier to each publicly disclosed vulnerability.

- The Common Weakness Enumeration (CWE) is a categorized list of common hardware and software weakness types.
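As an illustration of the granularity these standards provide, the sketch below maps a CVSS v3.x base score to the qualitative severity rating defined in the CVSS v3.1 specification (None, Low, Medium, High, Critical). The function name is our own; only the score bands come from the specification.

```python
def cvss_severity(base_score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= base_score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if base_score == 0.0:
        return "None"
    if base_score < 4.0:      # 0.1-3.9
        return "Low"
    if base_score < 7.0:      # 4.0-6.9
        return "Medium"
    if base_score < 9.0:      # 7.0-8.9
        return "High"
    return "Critical"         # 9.0-10.0


print(cvss_severity(9.8))  # Critical
```

Five bands instead of one is exactly what lets a team patch the Critical findings first rather than treating every finding as Medium.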

Incident handlers and auditors have similar company-specific categorizations for incidents and audit findings. But when it comes to our users, security teams have thrown everything we’ve learned about risk management out the door. Instead of managing user risk with any granularity, we lump all employees into one bucket, or we categorize them solely by department, role, location, or some other characteristic that is easy to determine but says little about risk. Worse, we often apply the same level of controls across the entire population, hoping to tamp down risk uniformly.

If we took this approach in other security disciplines, every incident would be a P2 and every unpatched machine would be Medium severity, forcing security teams to overinvest in some risks while grossly underprioritizing the most critical ones.

Applying the same controls to every person, asset, or finding mismanages risk: it overinvests in controls where risk is low and underinvests where risk is high.

User Risk is the One Area that Needs Effective Risk Management More Than Any Other in Security

According to the Verizon DBIR, the human element was responsible for over 82% of all breaches in 2021. Clearly, we have not yet found an effective way to secure our workforce against the onslaught of cyberattacks, and one-size-fits-all remediations such as training continue to fall short.

If we are to apply risk management techniques to our workforce, we need to understand precisely how likely each individual is to make a security mistake and what the impact of that mistake would be. We need to evolve user-risk management to the point where it can define the risk level of each individual and let us manage that risk accordingly.
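The same Likelihood × Consequence formula can be applied per person. This minimal sketch uses hypothetical users with made-up probabilities and impact values, and compares each individual's risk with the department average that bucket-based approaches would assign. The names, departments, and numbers are illustrative assumptions, not real data.

```python
from statistics import mean

# Hypothetical per-user estimates; real values would come from observed behavior.
# name: (department, probability of a security mistake, impact if it happens on a 1-10 scale)
users = {
    "alice": ("finance", 0.05, 9),
    "bob": ("finance", 0.40, 9),
    "carol": ("sales", 0.10, 3),
    "dave": ("sales", 0.15, 3),
}


def user_risk(p_mistake: float, impact: float) -> float:
    # Risk = Likelihood x Consequence, applied to one person
    return p_mistake * impact


per_user = {name: user_risk(p, i) for name, (_, p, i) in users.items()}

# Department averages: what a "lump by department" approach would see.
per_department: dict[str, list[float]] = {}
for name, (dept, _, _) in users.items():
    per_department.setdefault(dept, []).append(per_user[name])
dept_avg = {dept: mean(risks) for dept, risks in per_department.items()}

for name, (dept, _, _) in users.items():
    print(f"{name}: individual {per_user[name]:.2f}, {dept} average {dept_avg[dept]:.2f}")
```

With these illustrative numbers, the finance average overstates alice's risk and understates bob's, which is the precision that department-level buckets lose.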

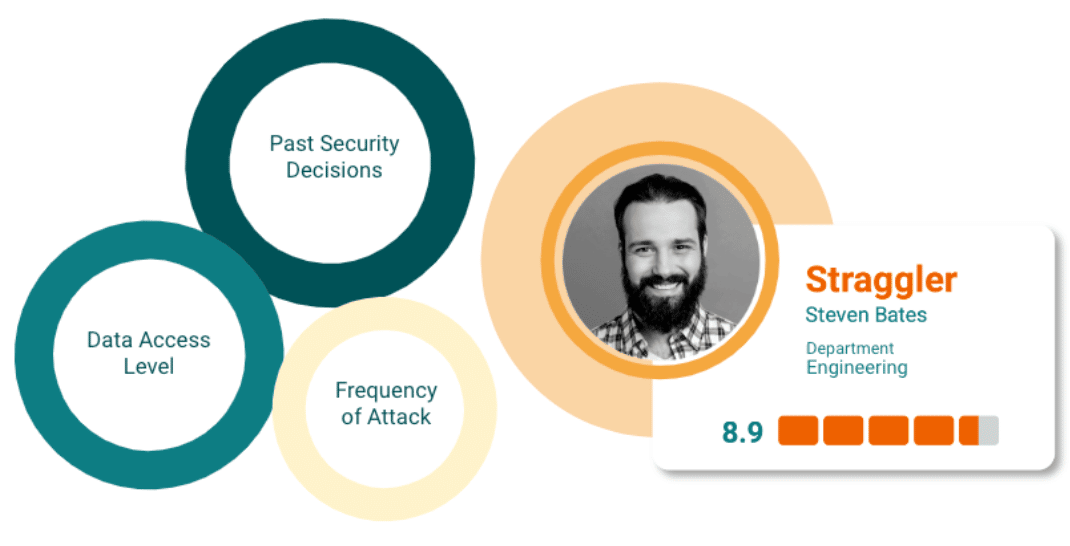

Different employees have different levels of security ‘common sense.’ Some are attacked more frequently than their peers; others have greater access and privileges. Understanding these risk characteristics for each individual in our workforce lets us see user risk in granular detail. By combining these risk factors, we can create a security score, much like a consumer credit score, that quickly conveys each individual’s overall risk level.
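One simple way to combine risk factors into a single score is a weighted sum. The sketch below is an illustrative assumption, not Elevate's scoring model: the three factors mirror the ones named above (behavior, attack frequency, access), each is normalized to 0-1, and the weights are hypothetical. As with a credit score, higher means lower risk.

```python
from dataclasses import dataclass

# Hypothetical weights; a real model would be calibrated against incident data.
WEIGHTS = {"behavior": 0.5, "attack_frequency": 0.3, "access": 0.2}


@dataclass
class RiskFactors:
    behavior: float          # 0.0 = consistently safe actions, 1.0 = frequent risky actions
    attack_frequency: float  # 0.0 = rarely targeted, 1.0 = most targeted in the org
    access: float            # 0.0 = minimal privilege, 1.0 = broad or privileged access


def security_score(f: RiskFactors) -> int:
    """Combine normalized risk factors into a 0-100 score; higher means lower risk."""
    for name in WEIGHTS:
        value = getattr(f, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    weighted_risk = sum(weight * getattr(f, name) for name, weight in WEIGHTS.items())
    return round(100 * (1 - weighted_risk))


print(security_score(RiskFactors(behavior=0.1, attack_frequency=0.2, access=0.1)))
print(security_score(RiskFactors(behavior=0.8, attack_frequency=0.9, access=0.7)))
```

A weighted sum is the simplest choice and keeps each factor's contribution explainable; a production model might use a statistical model fitted to historical incidents instead.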

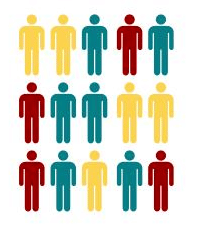

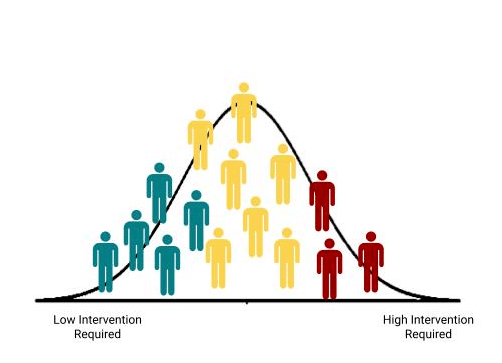

Leveraging our risk knowledge database of over 20 billion data points, Elevate has defined the standard distribution of risk across an enterprise workforce. We know that 6% of a workforce is high risk, and that this small cohort causes nearly 90% of incidents. We also know that 55% of a workforce are born security superstars with little inherent risk, and the last 37% carry varying degrees of medium risk.
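To check whether incidents concentrate in a small cohort within your own organization, you could group users into tiers and compare each tier's share of headcount with its share of incidents. This sketch assumes a 0-100 security score (higher means lower risk) and uses hypothetical tier thresholds and toy data. It does not reproduce Elevate's dataset or figures.

```python
from collections import Counter


def tier(score: int) -> str:
    # Hypothetical thresholds on a 0-100 score where higher means lower risk.
    if score < 40:
        return "high"
    if score < 75:
        return "medium"
    return "low"


def concentration(users: list[tuple[int, int]]) -> dict[str, tuple[float, float]]:
    """For each tier, return (share of workforce, share of incidents).

    `users` is a list of (security_score, incident_count) pairs.
    """
    headcount: Counter = Counter()
    incidents: Counter = Counter()
    for score, count in users:
        t = tier(score)
        headcount[t] += 1
        incidents[t] += count
    total_users = sum(headcount.values())
    total_incidents = sum(incidents.values()) or 1  # avoid division by zero
    return {t: (headcount[t] / total_users, incidents[t] / total_incidents) for t in headcount}


# Toy data: 10 users as (score, incidents).
toy = [(20, 8)] + [(60, 1), (60, 0), (60, 1)] + [(90, 0)] * 6
for t, (workforce, share) in concentration(toy).items():
    print(f"{t}: {workforce:.0%} of users, {share:.0%} of incidents")
```

If a small tier accounts for a disproportionate share of incidents, that tier is where additional controls buy the most risk reduction.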

Clearly, each of these populations needs a different type of intervention to mitigate the risk it poses to the organization.

By applying the right controls to the right people, security teams can focus their resources and optimize for both productivity and security. Low-risk users can be given more freedom in access and enablement, so they stay highly productive, while risky users receive enhanced controls that minimize their risk.
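Tiered controls can be expressed as a simple policy table keyed by risk tier. In this sketch the control names, their settings, and the score thresholds are all hypothetical placeholders, not settings from any particular product; the point is that each tier gets a different mix of freedom and protection.

```python
from typing import Any

# Hypothetical control policies per risk tier; names and values are illustrative only.
CONTROLS_BY_TIER: dict[str, dict[str, Any]] = {
    "low": {"mfa": "standard", "attachment_sandboxing": False, "admin_rights": "allowed"},
    "medium": {"mfa": "standard", "attachment_sandboxing": True, "admin_rights": "on_request"},
    "high": {"mfa": "phishing_resistant", "attachment_sandboxing": True, "admin_rights": "denied"},
}


def tier(score: int) -> str:
    # Hypothetical thresholds on a 0-100 score where higher means lower risk.
    if score < 40:
        return "high"
    if score < 75:
        return "medium"
    return "low"


def controls_for(score: int) -> dict[str, Any]:
    """Look up the control policy for a user's security score."""
    return CONTROLS_BY_TIER[tier(score)]


print(controls_for(90))
print(controls_for(20))
```

Keeping the policy as data rather than scattered conditionals makes it easy for security and business stakeholders to review and adjust together.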

What’s more, by quantifying individual risk, security teams can more easily justify these targeted protective measures, which reduces friction with business units, managers, and employees themselves. With this kind of risk management, security teams can protect the business more effectively, support productivity, and enable talent to be as innovative as possible.